Reshape Health Grant Winner

AI & Design to Advance Health Equity

Discover how we supported Alvee in advancing their platform to identify and measure health disparities, predict and track social needs, leverage data with real-time insights, and promote health equity.

The vision

Alvee is an AI-driven health equity data management platform that helps practices and health systems take every opportunity to advance health equity and anticipate their patients’ social needs.

The Transformation

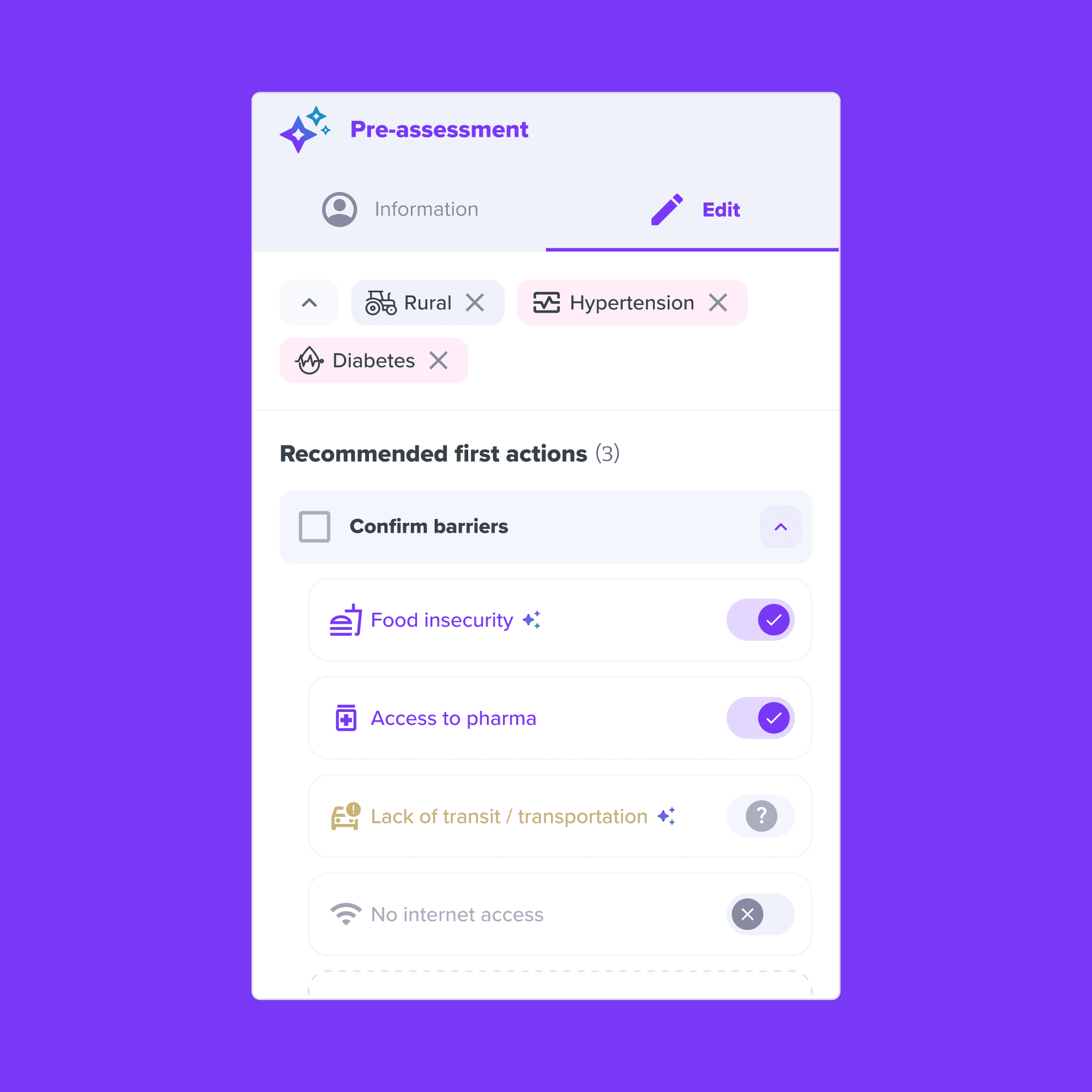

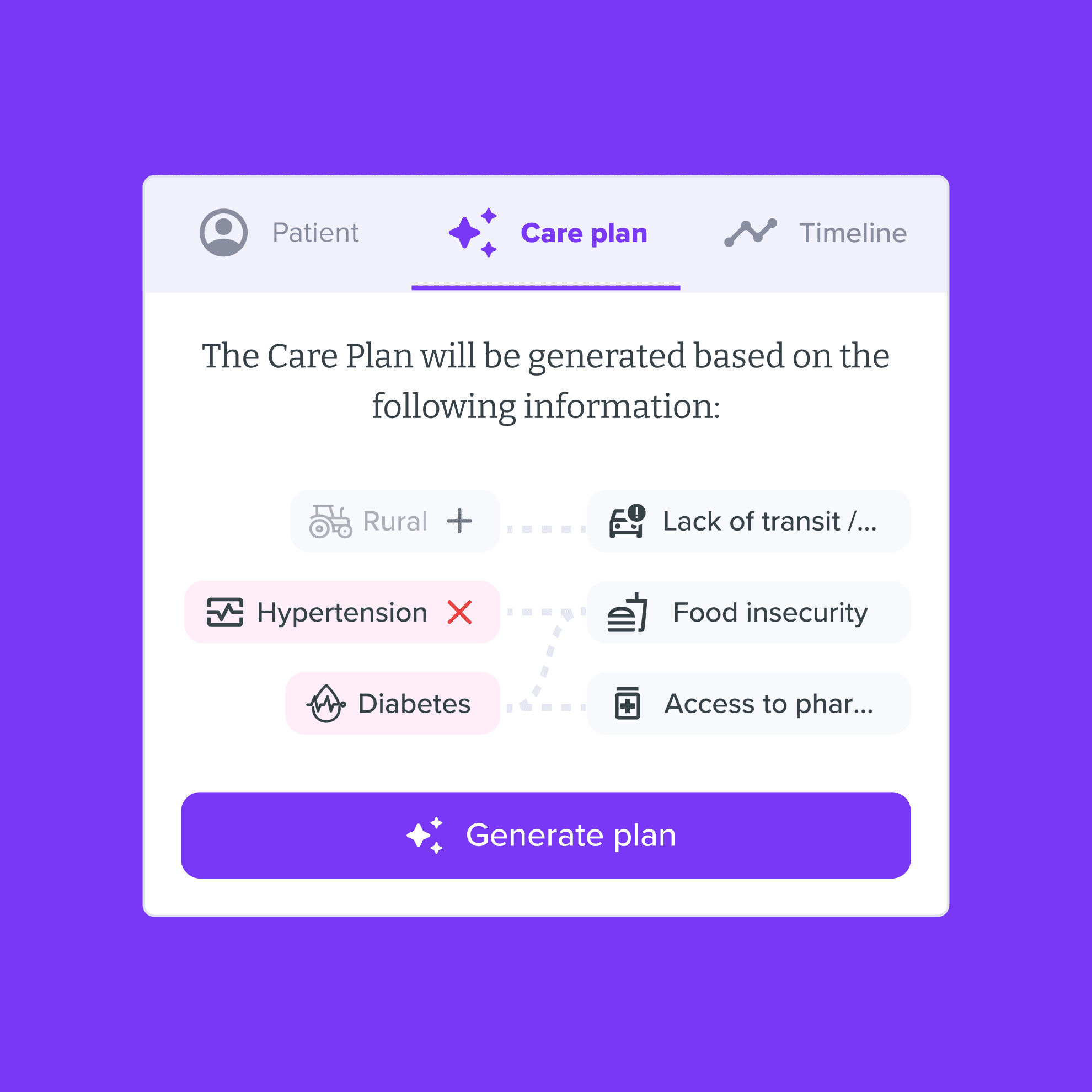

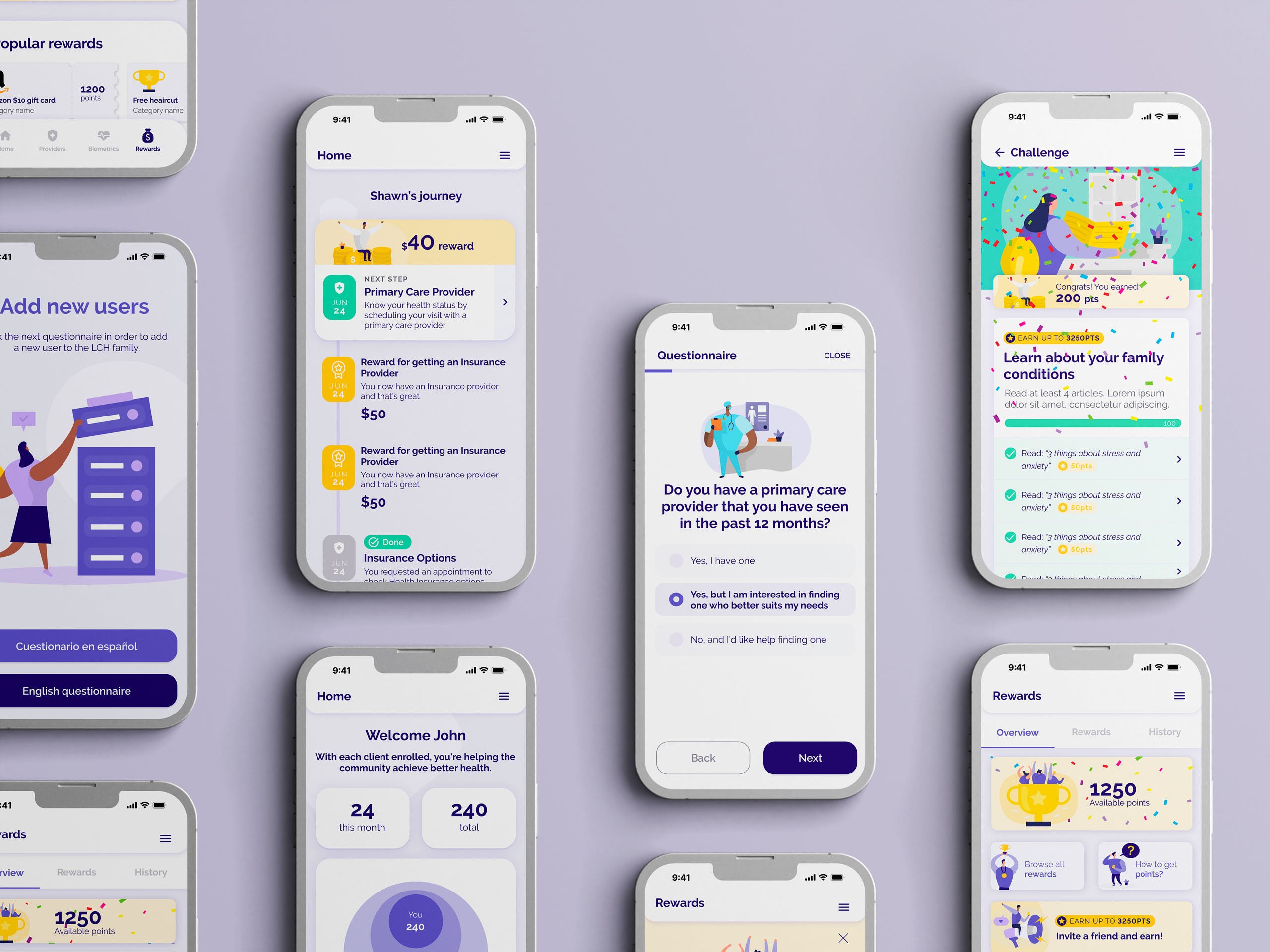

Arionkoder’s product team focused on enhancing the Social Worker and Care Manager journeys to boost product adoption. Collaborative sketching sessions fostered transparency in design decisions, driving valuable insights.

We integrated AI-focused design patterns to refine the User Interface and crafted the User Experience for both the Social Worker app and the Epic/Salesforce widget.

The final deliverable included a high-fidelity Figma prototype with a prioritized, well-documented feature list, ready for implementation.

Healthcare

Design

Team

Product Manager & Facilitator

UX/UI Designer

Delivery

Key Insights

UX/UI design

Our process focused on user research and the care navigator's journey

One of the things that differentiates us is that we dig deep into the problem through desk research, interviews, and mapping activities, so we can diagnose it properly before jumping into solutions.

We have learned that this enables us to propose a more robust action plan. Although the process can feel too exhaustive to our customers in the initial stages, they end up appreciating it because it helps them reflect on what they are doing and how they are doing it. What is more, once the process finishes, they have a much better-equipped thinking partner on our side, one that can connect the dots between technological capabilities, UI ideas, end-user needs, and their contextual constraints.

Luckily, the Alvee team, led by Nicole Cook, already shared this mindset, and they gave us a lot of material to process and understand. They had already conducted academic research in the area of Social Determinants of Health (SDoH), so there were even interview transcripts to learn from. We went through all of this in a customized Design Sprint for AI-driven products.

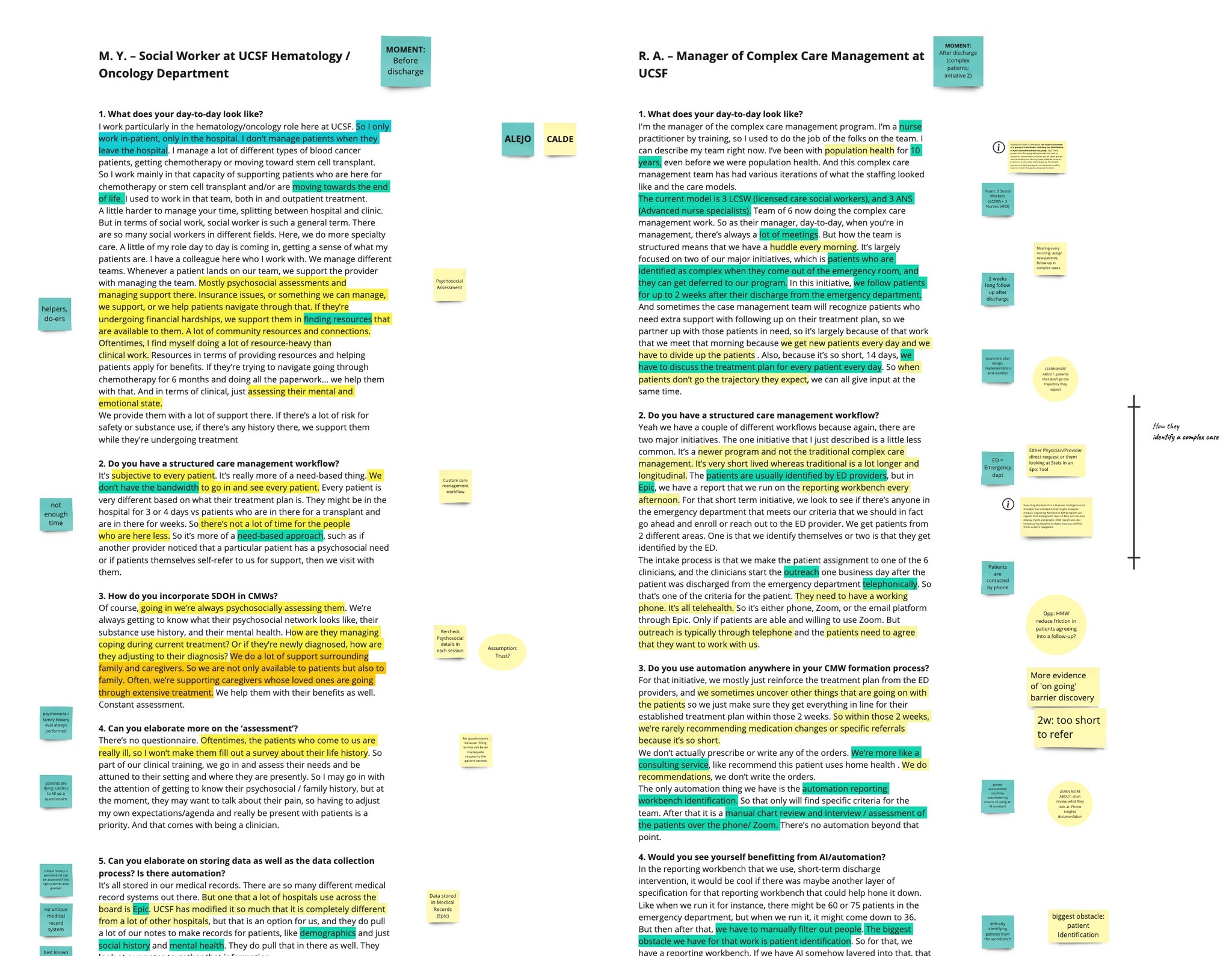

Figure 1: Processing interviews from previous research to identify different workflows.

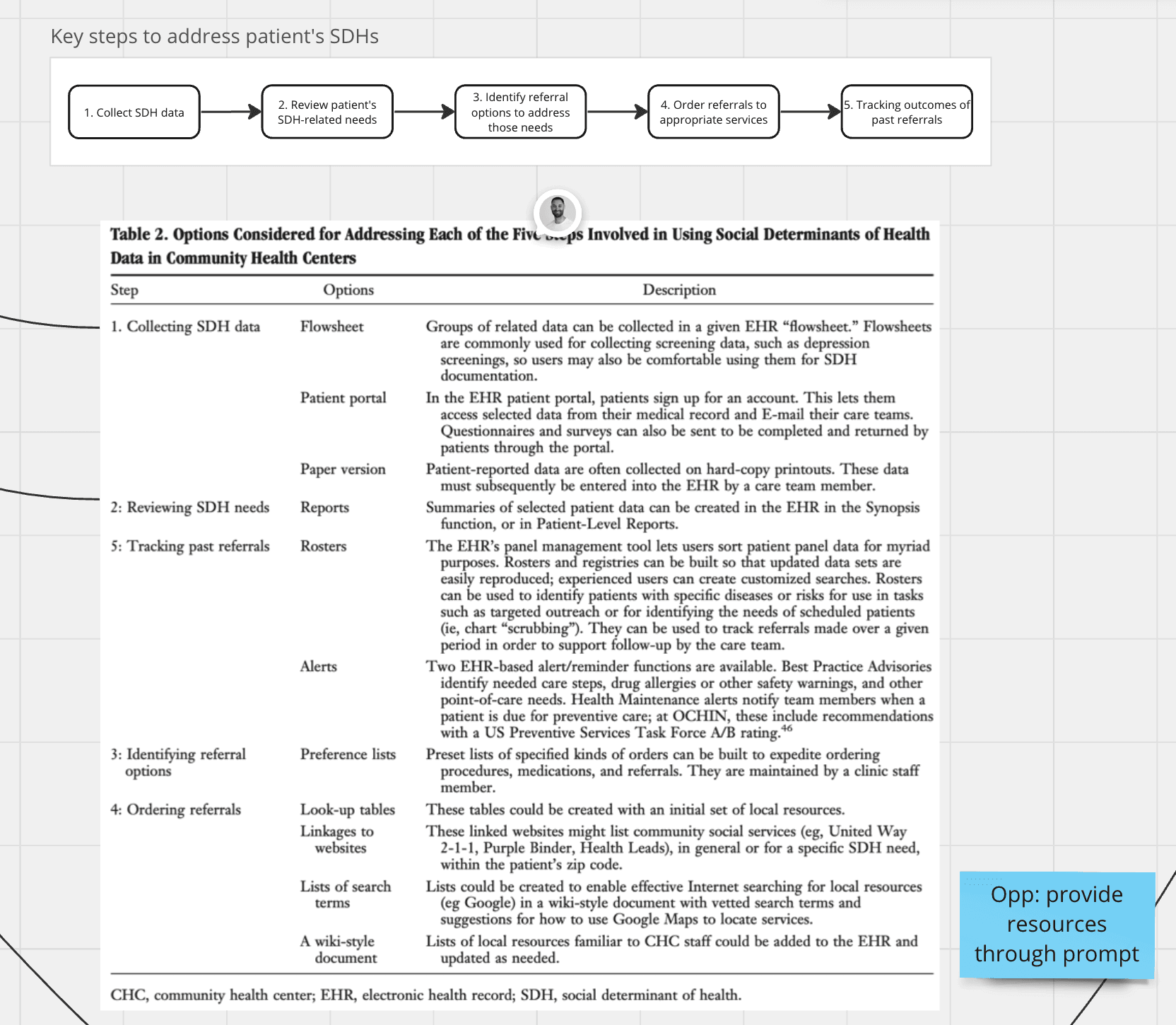

Figure 2: Processing a paper we found related to Social Workers' workflows.

The ML Experts participated and proposed ideas visually. We brought examples of emerging interaction patterns in the field of AI, and we introduced the importance of remixing ideas from others. This was key to leveling the playing field for all participants and helped us explore a myriad of ideas to select from.

Designing AI-based products with LLMs and generative capabilities

As we have discussed in our podcast and in other articles, designing AI products requires new skills, new design patterns to consider, and new collaborators to include. It’s also important to understand that, because AI is a new technology, introducing it to end users needs extra design care: most of them probably don’t know how it works and won’t care to understand the details. If we don’t manage users' expectations through communication and design, we can end up either disappointing them or scaring them away.

Let’s go through some examples of how this project addresses these challenges:

Getting help from the experts to shape the content

“Everybody was really invested in Alvee, in our company and our mission. The team really bought into our company, our mission, they truly cared about the outcomes. And it was pretty evident. I have worked with other development groups in the past, and I can say pretty positively that the Arionkoder team was far above some other groups I’ve worked with before.”

Nicole Cook

Founder @ Alvee

Transformation takeaways

In our work with Alvee, we tailored the platform to support Social Workers across diverse care settings, from outpatient and inpatient to emergency and chronic treatment environments.

We designed with the unique dynamics between Social Workers, Physicians, and Practices in mind, creating a user experience that respects these relationships.

Recognizing the growing role of Social Determinants of Health, we aligned the platform with U.S. health initiatives, promoting a more holistic approach to patient care.